Yarn Running Jobs

Understanding how YARN runs a job is essential for maximizing performance and ensuring smooth data processing.

In Hadoop 1.0, the JobTracker handled both cluster resource management and job scheduling and monitoring. The YARN architecture separates the resource management layer from the processing layer.

By default, Spark's scheduler runs jobs in FIFO fashion. Each job is divided into "stages" (e.g. map and reduce phases), and the first job gets priority on all available resources while its stages have tasks to launch; then the second job gets priority, and so on.

A typical complaint: "I have a running Spark application that occupies all the cores, so my other applications are not allocated any."

This story shows how to view YARN applications from the command line and how to kill an application. To list the applications on the cluster, use `yarn application -list` (add `-appStates RUNNING` to show only the running ones). To stop one, use `yarn application -kill` followed by its application ID.
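The `yarn application` commands for listing and killing applications can be wrapped in a small Python helper. This is a minimal sketch, assuming the `yarn` client is installed and on PATH. The helper names (`list_apps_cmd`, `kill_app_cmd`, `run_yarn`) are hypothetical, and the `application_<timestamp>_<sequence>` ID check is an assumption added as a safety guard, not something the CLI requires you to do.

```python
import re
import shlex
import subprocess
from typing import List, Optional

# Assumed ID shape: application_<clusterTimestamp>_<sequence> (hypothetical guard)
APP_ID_PATTERN = re.compile(r"application_\d+_\d+")


def list_apps_cmd(states: Optional[List[str]] = None) -> List[str]:
    """Build argv for `yarn application -list`, optionally filtered by state."""
    cmd = ["yarn", "application", "-list"]
    if states:
        # -appStates accepts a comma-separated list, e.g. RUNNING,ACCEPTED
        cmd += ["-appStates", ",".join(states)]
    return cmd


def kill_app_cmd(app_id: str) -> List[str]:
    """Build argv for `yarn application -kill <Application ID>`."""
    if not APP_ID_PATTERN.fullmatch(app_id):
        raise ValueError(f"unexpected YARN application ID: {app_id!r}")
    return ["yarn", "application", "-kill", app_id]


def run_yarn(cmd: List[str]) -> str:
    """Execute the command and return stdout (needs the `yarn` client on PATH)."""
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Print the commands instead of executing them, so the sketch runs anywhere.
    print(shlex.join(list_apps_cmd(["RUNNING"])))
    print(shlex.join(kill_app_cmd("application_1700000000000_0001")))
```

On a real cluster, `run_yarn(list_apps_cmd(["RUNNING"]))` returns the same table the CLI prints. Passing an argv list to `subprocess.run` rather than a shell string avoids quoting problems with IDs and state lists.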

From medium.com


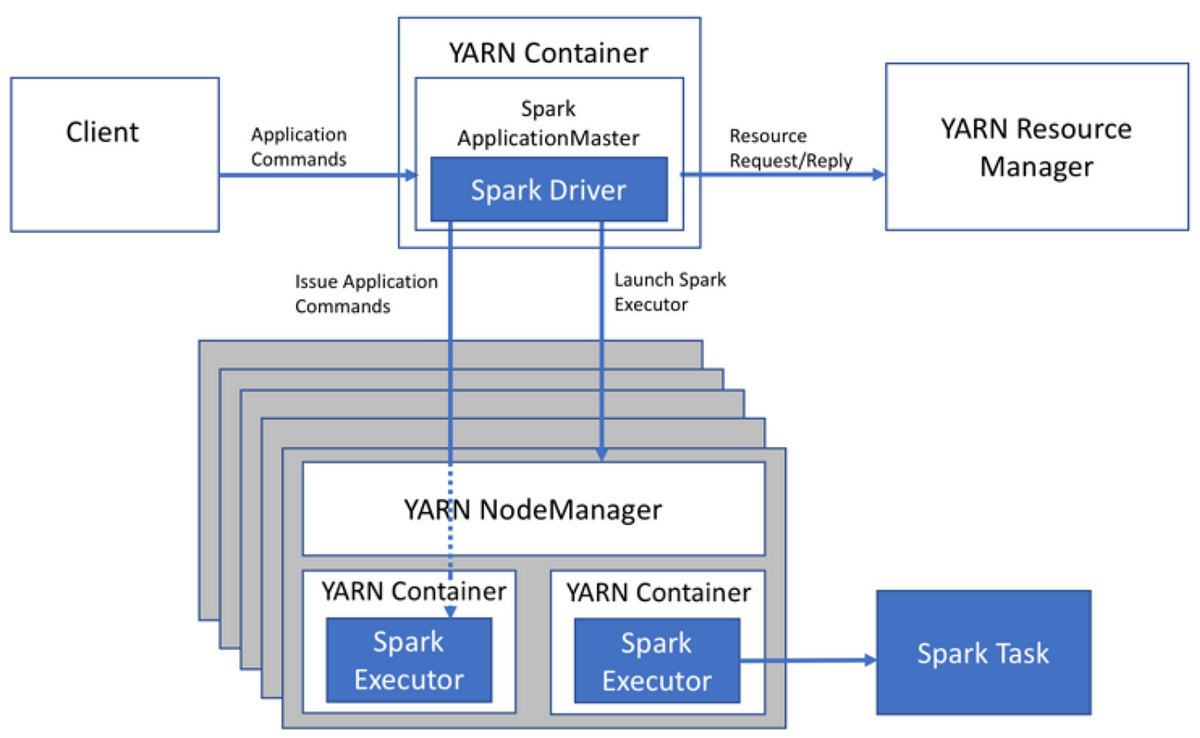

Running Spark Jobs on YARN. When running Spark on YARN, each Spark …
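One way to keep a single Spark application from holding every core on the cluster is to size its executors explicitly at submit time. The sketch below is a minimal illustration, assuming dynamic allocation is off: if it is enabled, `--num-executors` only sets the initial executor count, and `spark.dynamicAllocation.maxExecutors` is the property that bounds it. The script name `my_job.py`, the queue name `etl`, and the helper names are hypothetical.

```python
from typing import List, Optional


def executor_cores_requested(num_executors: int, executor_cores: int) -> int:
    """Cores the executors ask YARN for; the ApplicationMaster/driver container is extra."""
    return num_executors * executor_cores


def spark_submit_cmd(app: str, num_executors: int, executor_cores: int,
                     executor_memory: str = "2g",
                     queue: Optional[str] = None) -> List[str]:
    """Build a spark-submit argv with a fixed executor count and size."""
    if num_executors < 1 or executor_cores < 1:
        raise ValueError("executor count and cores must be positive")
    cmd = [
        "spark-submit",
        "--master", "yarn",
        "--num-executors", str(num_executors),
        "--executor-cores", str(executor_cores),
        "--executor-memory", executor_memory,
    ]
    if queue is not None:
        # A dedicated YARN queue lets the cluster scheduler's queue limits apply.
        cmd += ["--queue", queue]
    cmd.append(app)
    return cmd


if __name__ == "__main__":
    cmd = spark_submit_cmd("my_job.py", num_executors=4, executor_cores=2, queue="etl")
    print(" ".join(cmd))
    print("executor cores:", executor_cores_requested(4, 2))
```

With 4 executors of 2 cores each, the application requests 8 executor cores plus one container for the ApplicationMaster, leaving the rest of the cluster available to other applications.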

